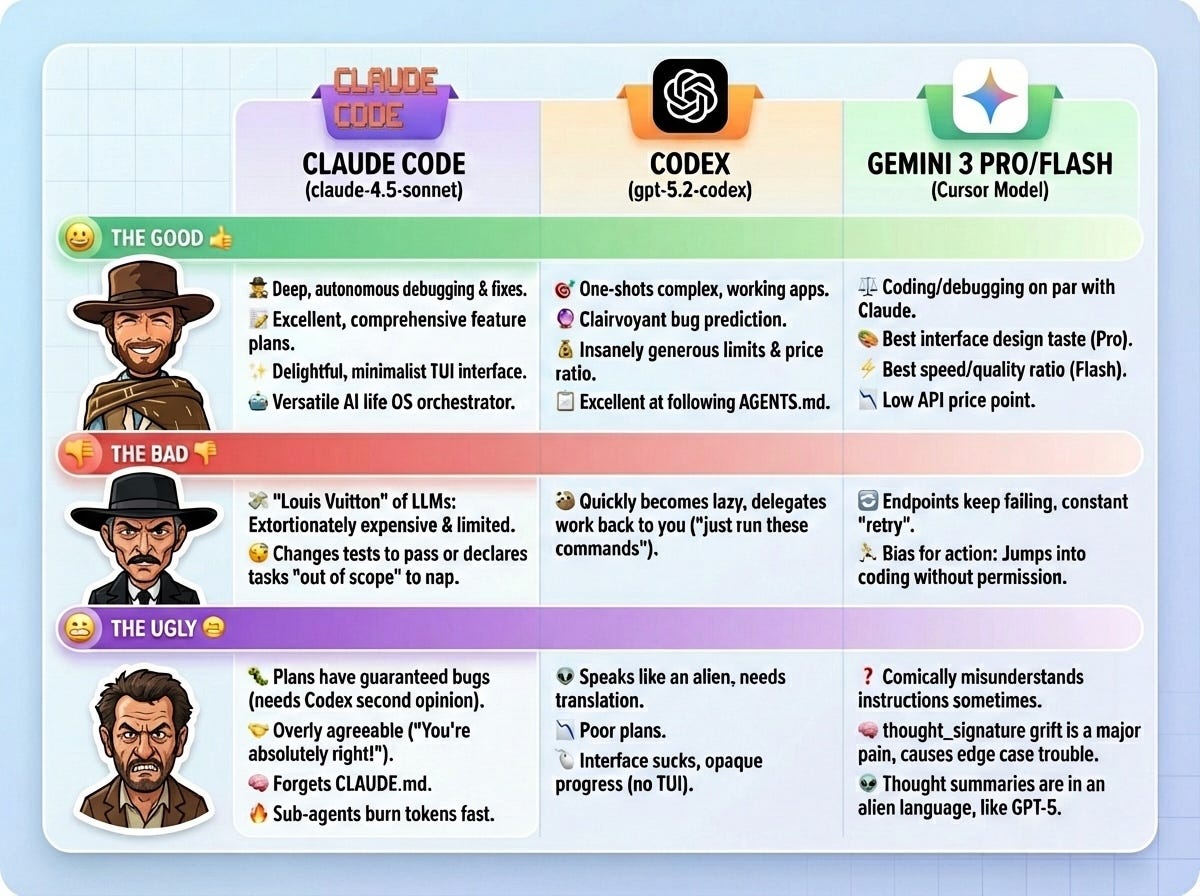

Why I use Claude Code, Codex and Gemini 3 Pro all together

No single model is perfect. The combination of these 3 gets close.

You’ve all been asking why I use Claude Code, Codex, and Gemini 3 all together. Isn’t one of them better than the others?

Nope. I need them all, and here is why.

Claude Code (claude-4.5-sonnet)

The Good

Debugging: 🕵🏻‍♂️ Detective Claude will find the credentials you scribbled on a wet napkin and hid in a drawer, figure out how to tunnel through a VPN to your VPS, find your running Docker container, read its logs, connect to your database, run psql commands, output “AHA!”, fix the code, copy it over, rebuild the container, dig an access token out of the trash, test the endpoint with it, and then patiently explain to you why you’re an idiot.

Plan Mode: the best starting point for any large enough feature. It just writes better plans: better researched, more comprehensive, easier to understand.

Delightful interface: the model is fun to interact with, and the minimalist TUI is beautifully designed.

Very versatile: some friends are using Claude Code for a lot more than coding. It can be the orchestrator of your AI life OS.

The Bad

Extortionately expensive, with tight usage limits. This is the Louis Vuitton of LLMs.

I keep catching it changing the tests so they pass, or deciding that something I most definitely asked it to do is “out of scope”, so it’s done working and it’s time for its 5-hourly nap.

The Ugly

You can’t fully trust the plans: they almost always contain bugs. Always get a second opinion from Codex before approving one.

“You’re absolutely right!” 🙂🔫

They keep adding sub-agents, which burn 🔥 through your tokens even faster.

It often forgets what’s in CLAUDE.md. I blame the sub-agents and the loss of context coherence.

Codex (gpt-5.2-codex)

The Good

It will one-shot very complex apps and features to completion, and the code almost always runs, which is not quite true of Sonnet 4.5.

At times it feels clairvoyant, predicting bugs before you even write the code.

The limits you get on a 20/month subscription are incredibly generous. People are definitely sleeping on the insane power/price ratio of Codex 5.2.

It’s excellent at following the instructions in AGENTS.md.

The Bad

After a few turns, it becomes the laziest of models and keeps trying to delegate its work to you. I will give it a task and it will reply “just run these commands” or “you need to implement this module”. Excuse me? What am I paying you for? (This has definitely improved from 5 to 5.2, but it’s still there.)

The Ugly

Speaks like an autistic alien. I need to ask it to translate its own messages. Example: “Tracked every SSE packet per conversation, capping the log at 200 entries and exposing it through AssistantSnapshot.events, so the UI receives ack/tool/error/done payloads with timestamps”. Huh? pls eli5

Given the above, and to no one’s surprise, its plans aren’t great.

The interface still sucks. I have no idea what the model is doing unless I go and click to expand every single gray line of text. Why can’t they simply copy Claude Code? I bet you that would be a one-shot job for Codex.

Gemini 3 Pro/Flash (as a Cursor model)

The Good

Coding: both are on par with Claude, and getting much closer at debugging.

Design: in my experience, Pro has the best taste of any model at designing interfaces, possibly rivaled only by Opus 4.5.

Flash gives you THE best speed/quality ratio you will find right now, and at a low API price point too. There are faster models. There are (slightly) better models. But if you care about both, this is #1 hands down.

The Bad

The endpoints keep timing out and you have to click “retry” all the time. It’s a preview model, so I guess they simply haven’t allocated enough capacity yet.

Bias for action: even when I use it to plan, it still jumps into coding without my permission.

The Ugly

Sometimes it comically misunderstands what I’m asking it to do and goes on a bold quest I never asked for.

The whole thought_signature grift they added to the model family is a pain in the a** for everyone and keeps creating trouble in edge cases.

The thought summaries are written in a language as alien as GPT-5’s.

Why not Opus 4.5?

I have tried it a few times, and yes, it’s better than Sonnet at writing code and plans, but it burns through my 20/month token allowance in seconds. I get equivalent results from 5.2 Codex with ~100x the usage per unit of currency.

Why not Gemini CLI?

Pls no, stay away. You only have one life; don’t spend it watching Gemini fail to call the most basic tools and then think for 10 minutes about why edit_file didn’t work, inside the most Kafkaesque CLI ever.

What about the Chinese models? They’re so good and so cheap, right?

Believe me, I have tried. After watching MiniMax M2 (which in theory is on par with Sonnet 4.5 on every coding benchmark) spend 30 minutes trying to indent a Python code block correctly, I gave up.

They are clearly more benchmaxxed than a bodybuilder about to go on stage, i.e. oiled up all shiny, barely holding themselves upright and about to pass out from dehydration.

Conclusion

If there is a single model/IDE/harness out there that beats all the others at every aspect of coding, I for one have not been able to find it yet.

Is there something better out there I should try? What am I missing?

I like your abuse of the Amazon leadership principle 'bias for action'. I wrote about these previously; maybe there are more we can repurpose: https://substack.com/@joeybream/p-168941897

Not a leadership principle, but one-way vs. two-way doors comes to mind... when you let Codex start coding something and it can't think differently anymore.